

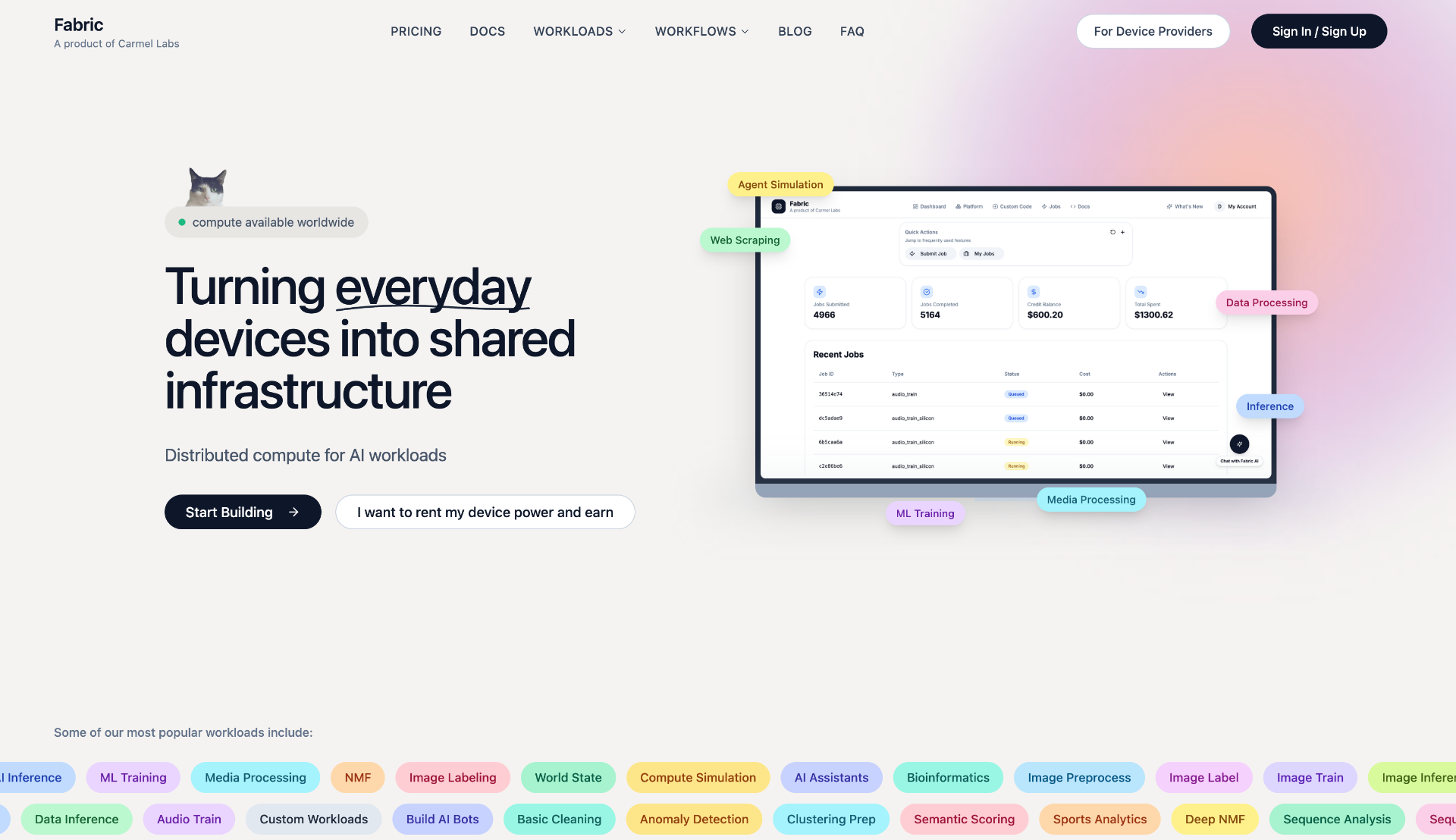

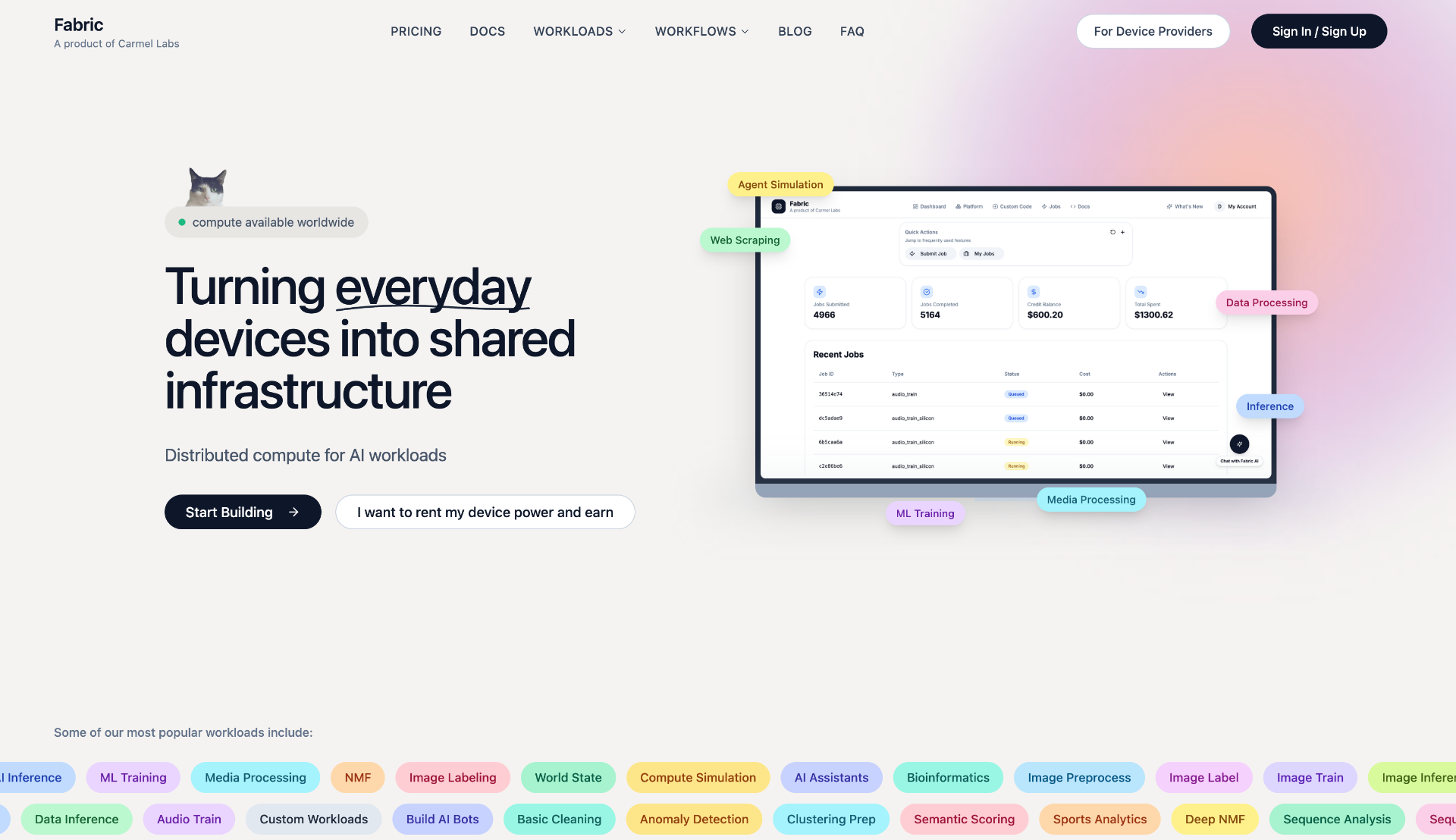

Fabric is a distributed compute network designed for AI and data workloads. It connects idle compute power from devices worldwide with organizations and developers who need computational resources, letting users run inference jobs at a fraction of traditional cloud costs while device owners earn passive income.

Supported workloads include AI inference, embeddings, transcription, ML training, data processing, web scraping, media and image processing, agent simulation, CI/CD builds (GitHub Actions runners, iOS builds, and macOS builds), and custom containerized workloads. The platform also supports specialized workloads such as bioinformatics (sequence analysis, variant analysis, and FASTQ processing) and sports analytics.

Workloads are submitted through the API or dashboard, and Fabric's orchestrator automatically splits them and distributes the pieces across its global network of devices. Containerized workloads can be deployed through the SDK or dashboard; the system handles distribution and aggregates the results with full provenance and verification.
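The split, distribute, and aggregate flow described above can be illustrated with a small local sketch. It does not use Fabric's API or SDK, which this listing does not document: the functions `split`, `run_shard`, `verify`, and `submit` are hypothetical, a thread pool stands in for remote devices, and SHA-256 digests stand in for whatever provenance mechanism Fabric actually uses.

```python
import hashlib
import json
from concurrent.futures import ThreadPoolExecutor


def split(items, n_shards):
    """Split items into at most n_shards contiguous shards (the last may be shorter)."""
    if n_shards < 1:
        raise ValueError("n_shards must be >= 1")
    if not items:
        return []
    size = -(-len(items) // n_shards)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def digest(obj):
    """SHA-256 of a canonical JSON encoding; a toy provenance fingerprint (assumption)."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def run_shard(shard_id, shard, task):
    """Stand-in for one remote device: apply task to each item and record digests."""
    outputs = [task(x) for x in shard]
    return {
        "shard": shard_id,
        "input_sha256": digest(shard),
        "output_sha256": digest(outputs),
        "outputs": outputs,
    }


def verify(record):
    """Recompute the output digest; detects outputs altered after hashing only."""
    return digest(record["outputs"]) == record["output_sha256"]


def submit(items, task, n_workers=4):
    """Hypothetical orchestrator: split, run shards in parallel, verify, aggregate."""
    shards = split(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Executor.map yields results in shard order, so aggregation keeps input order.
        records = list(pool.map(run_shard, range(len(shards)), shards,
                                [task] * len(shards)))
    if not all(verify(r) for r in records):
        raise RuntimeError("provenance check failed")
    results = [y for r in records for y in r["outputs"]]
    return results, records
```

For example, `submit(list(range(10)), lambda x: x * x)` splits ten inputs into four shards and returns the squares in input order together with one provenance record per shard. Note that `verify` only catches records changed after their digest was computed; checking results from untrusted devices would need something stronger, such as redundant execution.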

Fabric claims to be 80% cheaper than traditional cloud providers, with no cold starts and automatic retries. For security, it offers end-to-end encryption and isolated, sandboxed environments for workload execution. Its global network provides 24/7 availability, and distributed processing completes workloads 10x faster than traditional cloud solutions.

The platform targets developers who want to ship applications without managing infrastructure, positioning itself as a replacement for services such as AWS Lambda, Amazon SageMaker, Google Colab, and GitHub Actions. It is aimed at teams working on AI projects, computer vision, data processing, and other computational workloads that need scalable, cost-effective computing resources.

Key Features

- Distributed processing that completes workloads 10x faster than traditional cloud solutions

- Enterprise security with end-to-end encryption and isolated sandboxed environments for secure workload execution

Publisher: admin

Launch Date: 2026-02-14

Platform: Web

Pricing: Paid